
Create a training dataset

For the best results, use the same camera for both training data capture and production deployment.

You can add images to a dataset directly from a camera or vision service feed in the machine’s CONTROL or CONFIGURATION tab.

To add an image directly to a dataset from a visual feed, complete the following steps:

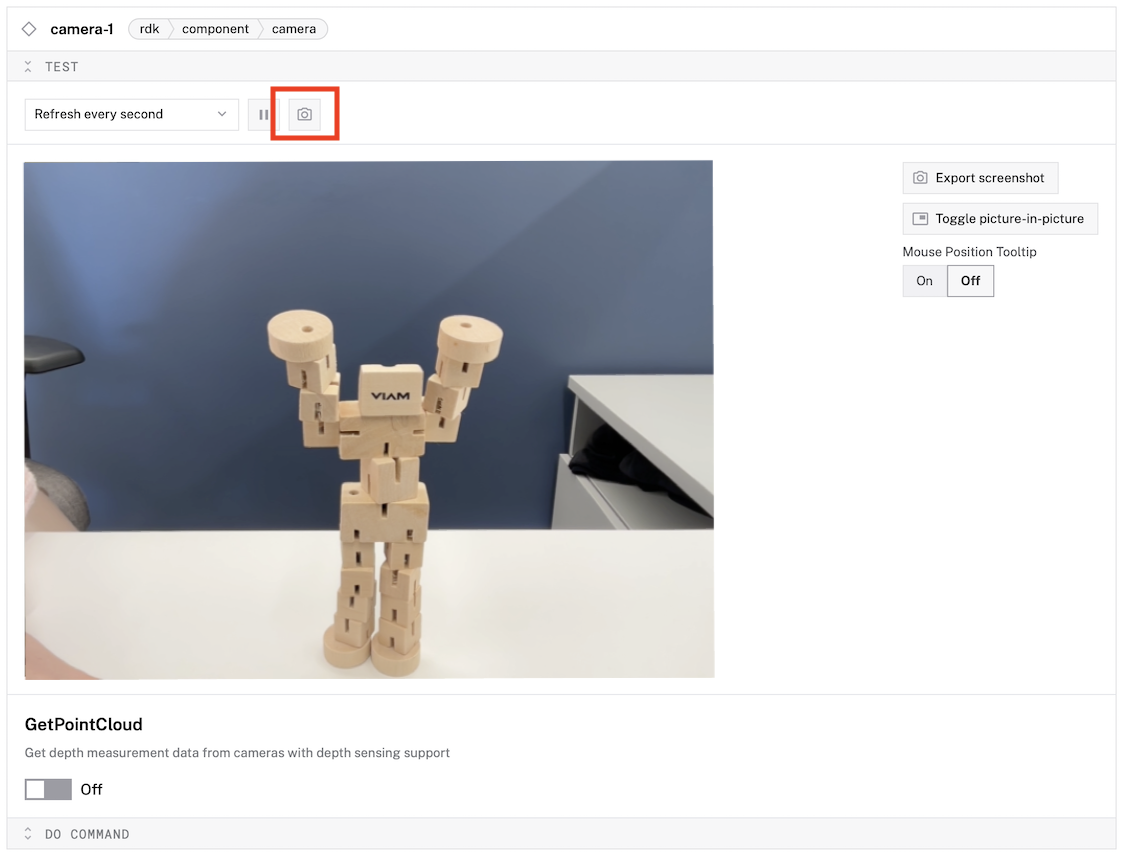

1. Open the TEST panel of any camera component or vision service to view a feed of images from the camera.
2. Click the button marked with the camera icon to save the currently displayed image to a dataset.
3. Select an existing dataset.
4. Click Add to add the image to the selected dataset.
5. When you see a success notification that reads “Saved image to dataset”, you have successfully added the image to the dataset.

To view images added to your dataset, go to the DATA page, open the DATASETS tab, then select your dataset.

To capture an image and add it to your DATA page with the Python SDK, fetch an image from your camera through your machine, then pass that image and an appropriate set of metadata to data_client.binary_data_capture_upload:

import asyncio
from datetime import datetime

from viam.app.viam_client import ViamClient
from viam.components.camera import Camera
from viam.robot.client import RobotClient
from viam.rpc.dial import Credentials, DialOptions

# Configuration constants – replace with your actual values
API_KEY = ""  # API key, find or create in your organization settings
API_KEY_ID = ""  # API key ID, find or create in your organization settings
MACHINE_ADDRESS = ""  # the address of the machine you want to capture images from
CAMERA_NAME = ""  # the name of the camera you want to capture images from
PART_ID = ""  # the part ID of the binary data you want to add to the dataset


async def connect() -> ViamClient:
    """Establish a connection to the Viam client using API credentials."""
    dial_options = DialOptions(
        credentials=Credentials(
            type="api-key",
            payload=API_KEY,
        ),
        auth_entity=API_KEY_ID,
    )
    return await ViamClient.create_from_dial_options(dial_options)


async def connect_machine() -> RobotClient:
    """Establish a connection to the machine using its address."""
    machine_opts = RobotClient.Options.with_api_key(
        api_key=API_KEY,
        api_key_id=API_KEY_ID,
    )
    return await RobotClient.at_address(MACHINE_ADDRESS, machine_opts)


async def main() -> int:
    viam_client = await connect()
    data_client = viam_client.data_client
    machine_client = await connect_machine()

    camera = Camera.from_robot(machine_client, CAMERA_NAME)

    # Capture image
    image_frame = await camera.get_image()

    # Upload image
    file_id = await data_client.binary_data_capture_upload(
        part_id=PART_ID,
        component_type="camera",
        component_name=CAMERA_NAME,
        method_name="GetImage",
        data_request_times=[datetime.utcnow(), datetime.utcnow()],
        file_extension=".jpg",
        binary_data=image_frame.data,
    )
    print(f"Uploaded image: {file_id}")

    viam_client.close()
    await machine_client.close()
    return 0


if __name__ == "__main__":
    asyncio.run(main())
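The example above passes the same timestamp twice as `data_request_times`. If you want the pair to bracket the actual capture, you can record a time immediately before and after the `get_image` call. The sketch below does this. It assumes only that the camera object has an async `get_image()` method, as in the example above. `FakeCamera` and `utc_now_naive` are hypothetical helpers that let the sketch run without a machine.

```python
import asyncio
from datetime import datetime, timezone
from typing import Any, List, Tuple


class FakeCamera:
    """Hypothetical stand-in for a camera client; returns fixed JPEG-like bytes."""

    async def get_image(self) -> bytes:
        await asyncio.sleep(0.01)  # simulate capture latency
        return b"\xff\xd8\xff placeholder image bytes"


def utc_now_naive() -> datetime:
    """Current UTC time without tzinfo, the same form datetime.utcnow() returns."""
    return datetime.now(timezone.utc).replace(tzinfo=None)


async def capture_with_request_times(camera: Any) -> Tuple[Any, List[datetime]]:
    """Capture one image and return it with [request_start, request_end]."""
    start = utc_now_naive()
    frame = await camera.get_image()
    end = utc_now_naive()
    return frame, [start, end]
```

With a real `Camera`, `frame` is the object returned by `get_image()`. You would pass `frame.data` as `binary_data` and the returned list as `data_request_times`.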

To capture an image and add it to your DATA page with the Go SDK, fetch an image from your camera through your machine, then pass that image and an appropriate set of metadata to DataClient.BinaryDataCaptureUpload:

package main

import (
	"context"
	"fmt"
	"time"

	"go.viam.com/rdk/app"
	"go.viam.com/rdk/components/camera"
	"go.viam.com/rdk/logging"
	"go.viam.com/rdk/robot/client"
	"go.viam.com/rdk/utils"
	"go.viam.com/utils/rpc"
)

func main() {
	apiKey := ""
	apiKeyID := ""
	machineAddress := ""
	cameraName := ""
	partID := ""

	logger := logging.NewDebugLogger("client")
	ctx := context.Background()

	viamClient, err := app.CreateViamClientWithAPIKey(
		ctx, app.Options{}, apiKey, apiKeyID, logger)
	if err != nil {
		logger.Fatal(err)
	}
	defer viamClient.Close()

	machine, err := client.New(
		ctx,
		machineAddress,
		logger,
		client.WithDialOptions(rpc.WithEntityCredentials(
			apiKeyID,
			rpc.Credentials{
				Type:    rpc.CredentialsTypeAPIKey,
				Payload: apiKey,
			})),
	)
	if err != nil {
		logger.Fatal(err)
	}
	defer machine.Close(ctx)

	// Capture image from camera
	cam, err := camera.FromRobot(machine, cameraName)
	if err != nil {
		logger.Fatal(err)
	}
	image, _, err := cam.Image(ctx, utils.MimeTypeJPEG, nil)
	if err != nil {
		logger.Fatal(err)
	}

	// Upload image to Viam
	dataClient := viamClient.DataClient()
	binaryDataID, err := dataClient.BinaryDataCaptureUpload(
		ctx,
		image,
		partID,
		"camera",
		cameraName,
		"GetImage",
		".jpg",
		&app.BinaryDataCaptureUploadOptions{
			DataRequestTimes: &[2]time.Time{time.Now(), time.Now()},
		},
	)
	if err != nil {
		logger.Fatal(err)
	}
	fmt.Printf("Uploaded image: %s\n", binaryDataID)
}

To capture an image and add it to your DATA page with the TypeScript SDK, fetch an image from your camera through your machine, then pass that image and an appropriate set of metadata to dataClient.binaryDataCaptureUpload:

import * as Viam from "@viamrobotics/sdk";

const CAMERA_NAME = "<camera-name>";
const MACHINE_ADDRESS = "<machine-address.viam.cloud>";
const API_KEY = "<api-key>";
const API_KEY_ID = "<api-key-id>";
const PART_ID = "<part-id>";

const machine = await Viam.createRobotClient({
  host: MACHINE_ADDRESS,
  credential: {
    type: "api-key",
    payload: API_KEY,
  },
  authEntity: API_KEY_ID,
});

const client: Viam.ViamClient = await Viam.createViamClient({
  credential: {
    type: "api-key",
    payload: API_KEY,
  },
  authEntity: API_KEY_ID,
});

const dataClient = client.dataClient;
const camera = new Viam.CameraClient(machine, CAMERA_NAME);

// Capture image
const imageFrame = await camera.getImage();

// Upload binary data
const now = new Date();
const fileId = await dataClient.binaryDataCaptureUpload({
  partId: PART_ID,
  componentType: "camera",
  componentName: CAMERA_NAME,
  methodName: "GetImage",
  dataRequestTimes: [now, now],
  fileExtension: ".jpg",
  binaryData: imageFrame,
});
console.log(`Uploaded image: ${fileId}`);

// Cleanup
await machine.disconnect();
dataClient.close();

To capture an image and add it to your DATA page with the Flutter SDK, fetch an image from your camera through your machine, then pass that image and an appropriate set of metadata to dataClient.binaryDataCaptureUpload:

import 'package:viam_sdk/viam_sdk.dart';

const String CAMERA_NAME = '<camera-name>';
const String MACHINE_ADDRESS = '<robot-address.viam.cloud>';
const String API_KEY = '<api-key>';
const String API_KEY_ID = '<api-key-id>';
const String PART_ID = '<part-id>';

Future<void> main() async {
  final machine = await RobotClient.atAddress(
    MACHINE_ADDRESS,
    RobotClientOptions.withApiKey(
      apiKey: API_KEY,
      apiKeyId: API_KEY_ID,
    ),
  );

  final client = await ViamClient.withApiKey(
    apiKeyId: API_KEY_ID,
    apiKey: API_KEY,
  );

  final dataClient = client.dataClient;
  final camera = Camera.fromRobot(machine, CAMERA_NAME);

  // Capture image
  final imageFrame = await camera.getImage();

  // Upload binary data
  final now = DateTime.now().toUtc();
  final fileId = await dataClient.binaryDataCaptureUpload(
    partId: PART_ID,
    componentType: 'camera',
    componentName: CAMERA_NAME,
    methodName: 'GetImage',
    dataRequestTimes: [now, now],
    fileExtension: '.jpg',
    binaryData: imageFrame,
  );
  print('Uploaded image: $fileId');

  // Cleanup: close the machine connection opened above
  await machine.close();
  dataClient.close();
}
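Each capture example above hard-codes `.jpg` as the file extension, which assumes the camera returns JPEG images. If your camera might return PNG instead, you can inspect the first bytes of the image and choose the extension to match. JPEG data starts with `FF D8 FF` and PNG data with `89 50 4E 47 0D 0A 1A 0A`. The sketch below applies that check. `extension_for_image` is a hypothetical helper, and it recognizes only these two formats.

```python
from typing import Optional

# Leading "magic" bytes of the image formats this sketch recognizes
_SIGNATURES = {
    b"\xff\xd8\xff": ".jpg",
    b"\x89PNG\r\n\x1a\n": ".png",
}


def extension_for_image(data: bytes) -> Optional[str]:
    """Return a file extension matching the image bytes, or None if unrecognized."""
    for signature, extension in _SIGNATURES.items():
        if data.startswith(signature):
            return extension
    return None
```

If the helper returns `None`, it is safer to skip the upload than to label the data with a guessed extension.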

Once you’ve captured enough images for training, you must annotate the images before you can use them to train a model.

To capture a large number of images for training an ML model, use the data management service to capture and sync image data from your camera.

When the data management service syncs captured images, Viam stores them on the DATA page but does not add them to a dataset. To use your captured images for training, add them to a dataset and annotate them.

Once you have enough images, consider disabling data capture to avoid incurring fees for capturing large amounts of training data.
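If you add many synced images to a dataset from code, one approach is to submit their IDs in fixed-size batches. A failed request then affects only part of the set. The sketch below splits IDs into batches. The batch size of 100 is an arbitrary assumption, not a documented Viam limit, and `chunked` is a hypothetical helper. You would pass each batch as `binary_ids` to `data_client.add_binary_data_to_dataset_by_ids`, the method used in the end-to-end example on this page.

```python
from typing import Iterator, List, Sequence


def chunked(ids: Sequence[str], size: int = 100) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `size` IDs, preserving their order."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(ids), size):
        yield list(ids[start:start + size])
```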

You can either manually add annotations through the Viam web UI, or add annotations with an existing ML model.

You must annotate images in order to train an ML model on them. Viam supports two ways to annotate an image: tags and labels.

Use tags to add metadata about an entire image, for example, whether the quality of a manufacturing output is good or bad.

If you have an ML model, use code to speed up annotating your data; otherwise, use the web UI.

The DATA page provides an interface for annotating images.

To tag an image:

1. Click an image, then click the + next to the Tags option.
2. Add one or more tags to your image.
3. Repeat these steps for all images in the dataset.

With the Python SDK, use an ML model to generate tags for an image or set of images, then pass the tags and image IDs to data_client.add_tags_to_binary_data_by_ids:

import asyncio

from viam.app.viam_client import ViamClient
from viam.media.video import ViamImage
from viam.robot.client import RobotClient
from viam.rpc.dial import Credentials, DialOptions
from viam.services.vision import VisionClient

# Configuration constants – replace with your actual values
API_KEY = ""  # API key, find or create in your organization settings
API_KEY_ID = ""  # API key ID, find or create in your organization settings
MACHINE_ADDRESS = ""  # the address of the machine you want to capture images from
CLASSIFIER_NAME = ""  # the name of the classifier you want to use
BINARY_DATA_ID = ""  # the ID of the image you want to label


async def connect() -> ViamClient:
    """Establish a connection to the Viam client using API credentials."""
    dial_options = DialOptions(
        credentials=Credentials(
            type="api-key",
            payload=API_KEY,
        ),
        auth_entity=API_KEY_ID,
    )
    return await ViamClient.create_from_dial_options(dial_options)


async def connect_machine() -> RobotClient:
    """Establish a connection to the machine using its address."""
    machine_opts = RobotClient.Options.with_api_key(
        api_key=API_KEY,
        api_key_id=API_KEY_ID,
    )
    return await RobotClient.at_address(MACHINE_ADDRESS, machine_opts)


async def main() -> int:
    viam_client = await connect()
    data_client = viam_client.data_client
    machine = await connect_machine()

    try:
        classifier = VisionClient.from_robot(machine, CLASSIFIER_NAME)

        # Get image from data in Viam
        data = await data_client.binary_data_by_ids([BINARY_DATA_ID])
        binary_data = data[0]

        # Convert binary data to ViamImage (the MIME type travels with the image)
        mime_type = binary_data.metadata.capture_metadata.mime_type
        image = ViamImage(binary_data.binary, mime_type)

        # Get the top two classifications for the image
        tags = await classifier.get_classifications(image=image, count=2)
        if not tags:
            print("No tags found")
            return 1

        for tag in tags:
            await data_client.add_tags_to_binary_data_by_ids(
                tags=[tag.class_name],
                binary_ids=[BINARY_DATA_ID],
            )
            print(f"Added tag to image: {tag.class_name}")
        return 0
    finally:
        # Close both connections, including on the early return above
        viam_client.close()
        await machine.close()


if __name__ == "__main__":
    asyncio.run(main())
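The example above tags the image with every classification returned, regardless of confidence. You may want to filter first so that low-confidence guesses don't become training labels. The sketch below keeps only class names at or above a threshold and drops duplicates, so you can pass the list to `add_tags_to_binary_data_by_ids` in a single call. The 0.5 threshold is an arbitrary assumption. `Classification` is a hypothetical stand-in that mirrors the `class_name` and `confidence` fields of a classification result.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Classification:
    """Hypothetical stand-in for a vision service classification result."""
    class_name: str
    confidence: float


def tags_from_classifications(
    classifications: Iterable[Classification], min_confidence: float = 0.5
) -> List[str]:
    """Return unique class names at or above min_confidence, highest confidence first."""
    ranked = sorted(classifications, key=lambda c: c.confidence, reverse=True)
    tags: List[str] = []
    for c in ranked:
        if c.confidence >= min_confidence and c.class_name not in tags:
            tags.append(c.class_name)
    return tags
```

For example, `tags_from_classifications([Classification("good", 0.92), Classification("bad", 0.31)])` returns `["good"]`.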

With the Go SDK, use an ML model to generate tags for an image or set of images, then pass the tags and image IDs to DataClient.AddTagsToBinaryDataByIDs:

package main

import (
	"bytes"
	"context"
	"fmt"
	"image/jpeg"

	"go.viam.com/rdk/app"
	"go.viam.com/rdk/logging"
	"go.viam.com/rdk/robot/client"
	"go.viam.com/rdk/services/vision"
	"go.viam.com/utils/rpc"
)

func main() {
	apiKey := ""
	apiKeyID := ""
	machineAddress := ""
	classifierName := ""
	binaryDataID := ""

	logger := logging.NewDebugLogger("client")
	ctx := context.Background()

	viamClient, err := app.CreateViamClientWithAPIKey(
		ctx, app.Options{}, apiKey, apiKeyID, logger)
	if err != nil {
		logger.Fatal(err)
	}
	defer viamClient.Close()

	machine, err := client.New(
		ctx,
		machineAddress,
		logger,
		client.WithDialOptions(rpc.WithEntityCredentials(
			apiKeyID,
			rpc.Credentials{
				Type:    rpc.CredentialsTypeAPIKey,
				Payload: apiKey,
			})),
	)
	if err != nil {
		logger.Fatal(err)
	}
	defer machine.Close(ctx)

	dataClient := viamClient.DataClient()
	data, err := dataClient.BinaryDataByIDs(ctx, []string{binaryDataID})
	if err != nil {
		logger.Fatal(err)
	}
	binaryData := data[0]

	// Convert binary data to image.Image (this example assumes the image is a JPEG)
	img, err := jpeg.Decode(bytes.NewReader(binaryData.Binary))
	if err != nil {
		logger.Fatal(err)
	}

	// Get the top two classifications for the image
	classifier, err := vision.FromRobot(machine, classifierName)
	if err != nil {
		logger.Fatal(err)
	}
	classifications, err := classifier.Classifications(ctx, img, 2, nil)
	if err != nil {
		logger.Fatal(err)
	}
	if len(classifications) == 0 {
		logger.Fatal("no classifications found")
	}

	for _, classification := range classifications {
		err := dataClient.AddTagsToBinaryDataByIDs(ctx, []string{classification.Label()}, []string{binaryDataID})
		if err != nil {
			logger.Fatal(err)
		}
		fmt.Printf("Added tag to image: %s\n", classification.Label())
	}
}

With the TypeScript SDK, use an ML model to generate tags for an image or set of images, then pass the tags and image IDs to dataClient.addTagsToBinaryDataByIds:

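import * as Viam from "@viamrobotics/sdk";

const MACHINE_ADDRESS = "<machine-address.viam.cloud>";
const API_KEY = "<api-key>";
const API_KEY_ID = "<api-key-id>";

// The vision service runs on your machine, so connect to the machine first
const machine = await Viam.createRobotClient({
  host: MACHINE_ADDRESS,
  credential: { type: "api-key", payload: API_KEY },
  authEntity: API_KEY_ID,
});
const client = await Viam.createViamClient({
  credential: { type: "api-key", payload: API_KEY },
  authEntity: API_KEY_ID,
});

const myDetector = new Viam.VisionClient(machine, "<detector_name>");
const dataClient = client.dataClient;

// Capture an image from the camera and run the detector on it
const result = await myDetector.captureAllFromCamera("<camera_name>", {
  returnImage: true,
  returnDetections: true,
});

// Use the detected class names as tags, without duplicates
const tags = [...new Set(result.detections.map((detection) => detection.className))];

// Find the IDs of recent images from the camera
const myFilter = dataClient.createFilter({
  componentName: "camera-1",
  organizationIds: ["<org-id>"],
});
const binaryResult = await dataClient.binaryDataByFilter({
  filter: myFilter,
  limit: 20,
  includeBinaryData: false,
});

const myIds: string[] = [];
for (const obj of binaryResult.binaryMetadata) {
  myIds.push(obj.metadata.binaryDataId);
}

await dataClient.addTagsToBinaryDataByIds(tags, myIds);
await machine.disconnect();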

With the Flutter SDK, use an ML model to generate tags for an image or set of images, then pass the tags and image IDs to dataClient.addTagsToBinaryDataByIds:

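import 'package:viam_sdk/viam_sdk.dart';

const String MACHINE_ADDRESS = '<robot-address.viam.cloud>';
const String API_KEY = '<api-key>';
const String API_KEY_ID = '<api-key-id>';

Future<void> main() async {
  // The vision service runs on your machine, so connect to the machine first
  final machine = await RobotClient.atAddress(
    MACHINE_ADDRESS,
    RobotClientOptions.withApiKey(apiKey: API_KEY, apiKeyId: API_KEY_ID),
  );
  final viamClient = await ViamClient.withApiKey(
    apiKeyId: API_KEY_ID,
    apiKey: API_KEY,
  );

  final myDetector = VisionClient.fromRobot(machine, "<detector_name>");
  final dataClient = viamClient.dataClient;

  // Capture an image from the camera and run the detector on it
  final result = await myDetector.captureAllFromCamera(
    "<camera_name>",
    returnImage: true,
    returnDetections: true,
  );

  // Use the detected class names as tags, without duplicates
  final tags = result.detections.map((detection) => detection.className).toSet().toList();

  // Find the IDs of recent images from the camera
  final myFilter = createFilter(
    componentName: "camera-1",
    organizationIds: ["<org-id>"],
  );
  final binaryResult = await dataClient.binaryDataByFilter(
    filter: myFilter,
    limit: 20,
    includeBinaryData: false,
  );

  final myIds = <String>[];
  for (final obj in binaryResult.binaryMetadata) {
    myIds.add(obj.metadata.binaryDataId);
  }

  await dataClient.addTagsToBinaryDataByIds(tags, myIds);
  await machine.close();
}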

Once you’ve annotated your dataset, you can train an ML model to make inferences.

Use labels to add metadata about objects within an image, for example by drawing bounding boxes around each bicycle in a street scene and adding the bicycle label.

If you have an ML model, use code to speed up annotating your data; otherwise, use the web UI.

The DATA page provides an interface for annotating images.

To label an object with a bounding box:

1. Click an image, then click the Annotate button in the right-side menu.
2. Choose an existing label or create a new label.
3. While holding the Command key (on macOS) or the Control key (on Linux and Windows), click and drag on the image to create the bounding box.
4. Once created, you can move, resize, or delete the bounding box.
5. Repeat these steps for all images in the dataset.

With the Python SDK, use an ML model to generate bounding boxes for an image, then pass each bounding box and the image ID separately to data_client.add_bounding_box_to_image_by_id:

import asyncio

from viam.app.viam_client import ViamClient
from viam.media.video import ViamImage
from viam.robot.client import RobotClient
from viam.rpc.dial import Credentials, DialOptions
from viam.services.vision import VisionClient

# Configuration constants – replace with your actual values
API_KEY = ""  # API key, find or create in your organization settings
API_KEY_ID = ""  # API key ID, find or create in your organization settings
MACHINE_ADDRESS = ""  # the address of the machine you want to capture images from
DETECTOR_NAME = ""  # the name of the detector you want to use
BINARY_DATA_ID = ""  # the ID of the image you want to label


async def connect() -> ViamClient:
    """Establish a connection to the Viam client using API credentials."""
    dial_options = DialOptions(
        credentials=Credentials(
            type="api-key",
            payload=API_KEY,
        ),
        auth_entity=API_KEY_ID,
    )
    return await ViamClient.create_from_dial_options(dial_options)


async def connect_machine() -> RobotClient:
    """Establish a connection to the machine using its address."""
    machine_opts = RobotClient.Options.with_api_key(
        api_key=API_KEY,
        api_key_id=API_KEY_ID,
    )
    return await RobotClient.at_address(MACHINE_ADDRESS, machine_opts)


async def main() -> int:
    viam_client = await connect()
    data_client = viam_client.data_client
    machine = await connect_machine()

    try:
        detector = VisionClient.from_robot(machine, DETECTOR_NAME)

        # Get image from data in Viam
        data = await data_client.binary_data_by_ids([BINARY_DATA_ID])
        binary_data = data[0]

        # Convert binary data to ViamImage (the MIME type travels with the image)
        mime_type = binary_data.metadata.capture_metadata.mime_type
        image = ViamImage(binary_data.binary, mime_type)

        # Get detections using the image
        detections = await detector.get_detections(image)
        if not detections:
            print("No detections found")
            return 1

        for detection in detections:
            # Skip bounding boxes that are too small to be useful
            if (detection.x_max_normalized - detection.x_min_normalized <= 0.01
                    or detection.y_max_normalized - detection.y_min_normalized <= 0.01):
                continue

            bbox_id = await data_client.add_bounding_box_to_image_by_id(
                binary_id=BINARY_DATA_ID,
                label=detection.class_name,
                x_min_normalized=detection.x_min_normalized,
                y_min_normalized=detection.y_min_normalized,
                x_max_normalized=detection.x_max_normalized,
                y_max_normalized=detection.y_max_normalized,
            )
            print(f"Added bounding box to image: {bbox_id}")
        return 0
    finally:
        # Close both connections, including on the early return above
        viam_client.close()
        await machine.close()


if __name__ == "__main__":
    asyncio.run(main())
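The example above skips boxes that are 1% or less of the image in either dimension. The sketch below turns that check into a reusable filter. It adds one condition that is not in the example: it discards boxes whose coordinates fall outside the normalized range [0, 1] or whose minimum exceeds their maximum. `Detection` is a hypothetical stand-in that mirrors the normalized fields and `class_name` the example reads from each detection.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Detection:
    """Hypothetical stand-in for a vision service detection result."""
    class_name: str
    x_min_normalized: float
    y_min_normalized: float
    x_max_normalized: float
    y_max_normalized: float


def usable_detections(detections: Iterable[Detection], min_size: float = 0.01) -> List[Detection]:
    """Keep boxes inside [0, 1] whose width and height both exceed min_size."""
    kept = []
    for d in detections:
        coords = (d.x_min_normalized, d.y_min_normalized, d.x_max_normalized, d.y_max_normalized)
        if not all(0.0 <= v <= 1.0 for v in coords):
            continue  # outside the image
        width = d.x_max_normalized - d.x_min_normalized
        height = d.y_max_normalized - d.y_min_normalized
        if width > min_size and height > min_size:  # also rejects inverted boxes
            kept.append(d)
    return kept
```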

With the Go SDK, use an ML model to generate bounding boxes for an image, then pass each bounding box and the image ID separately to DataClient.AddBoundingBoxToImageByID:

package main

import (
	"bytes"
	"context"
	"fmt"
	"image/jpeg"

	"go.viam.com/rdk/app"
	"go.viam.com/rdk/logging"
	"go.viam.com/rdk/robot/client"
	"go.viam.com/rdk/services/vision"
	"go.viam.com/utils/rpc"
)

func main() {
	apiKey := ""
	apiKeyID := ""
	machineAddress := ""
	detectorName := ""
	binaryDataID := ""

	logger := logging.NewDebugLogger("client")
	ctx := context.Background()

	viamClient, err := app.CreateViamClientWithAPIKey(
		ctx, app.Options{}, apiKey, apiKeyID, logger)
	if err != nil {
		logger.Fatal(err)
	}
	defer viamClient.Close()

	machine, err := client.New(
		ctx,
		machineAddress,
		logger,
		client.WithDialOptions(rpc.WithEntityCredentials(
			apiKeyID,
			rpc.Credentials{
				Type:    rpc.CredentialsTypeAPIKey,
				Payload: apiKey,
			})),
	)
	if err != nil {
		logger.Fatal(err)
	}
	defer machine.Close(ctx)

	dataClient := viamClient.DataClient()
	detector, err := vision.FromRobot(machine, detectorName)
	if err != nil {
		logger.Fatal(err)
	}

	data, err := dataClient.BinaryDataByIDs(ctx, []string{binaryDataID})
	if err != nil {
		logger.Fatal(err)
	}
	binaryData := data[0]

	// Convert binary data to image.Image (this example assumes the image is a JPEG)
	img, err := jpeg.Decode(bytes.NewReader(binaryData.Binary))
	if err != nil {
		logger.Fatal(err)
	}

	// Get detections using the image
	detections, err := detector.Detections(ctx, img, nil)
	if err != nil {
		logger.Fatal(err)
	}
	if len(detections) == 0 {
		logger.Fatal("no detections found")
	}

	for _, detection := range detections {
		// Skip detections without normalized coordinates or with boxes too small to be useful
		box := detection.NormalizedBoundingBox()
		if len(box) < 4 ||
			float64(box[2]-box[0]) <= 0.01 ||
			float64(box[3]-box[1]) <= 0.01 {
			continue
		}

		bboxID, err := dataClient.AddBoundingBoxToImageByID(
			ctx,
			binaryDataID,
			detection.Label(),
			float64(box[0]),
			float64(box[1]),
			float64(box[2]),
			float64(box[3]),
		)
		if err != nil {
			logger.Fatal(err)
		}
		fmt.Printf("Added bounding box to image: %s\n", bboxID)
	}
}

With the TypeScript SDK, use an ML model to generate bounding boxes for an image, then pass each bounding box and the image ID separately to dataClient.addBoundingBoxToImageById:

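import * as Viam from "@viamrobotics/sdk";

const MACHINE_ADDRESS = "<machine-address.viam.cloud>";
const API_KEY = "<api-key>";
const API_KEY_ID = "<api-key-id>";

// The vision service runs on your machine, so connect to the machine first
const machine = await Viam.createRobotClient({
  host: MACHINE_ADDRESS,
  credential: { type: "api-key", payload: API_KEY },
  authEntity: API_KEY_ID,
});
const client = await Viam.createViamClient({
  credential: { type: "api-key", payload: API_KEY },
  authEntity: API_KEY_ID,
});

const myDetector = new Viam.VisionClient(machine, "<detector_name>");
const dataClient = client.dataClient;

// Capture an image from the camera and run the detector on it
const result = await myDetector.captureAllFromCamera("<camera_name>", {
  returnImage: true,
  returnDetections: true,
});

// Process each detection and add bounding boxes
for (const detection of result.detections) {
  const { xMinNormalized, yMinNormalized, xMaxNormalized, yMaxNormalized } = detection;
  // Skip detections that don't include normalized coordinates
  if (xMinNormalized === undefined || yMinNormalized === undefined ||
      xMaxNormalized === undefined || yMaxNormalized === undefined) {
    continue;
  }

  const bboxId = await dataClient.addBoundingBoxToImageById({
    binaryId: "<YOUR-BINARY-DATA-ID>",
    label: detection.className,
    xMinNormalized,
    yMinNormalized,
    xMaxNormalized,
    yMaxNormalized,
  });
  console.log(`Added bounding box ID: ${bboxId} for detection: ${detection.className}`);
}

await machine.disconnect();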

With the Flutter SDK, use an ML model to generate bounding boxes for an image, then pass each bounding box and the image ID separately to dataClient.addBoundingBoxToImageById:

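import 'package:viam_sdk/viam_sdk.dart';

const String MACHINE_ADDRESS = '<robot-address.viam.cloud>';
const String API_KEY = '<api-key>';
const String API_KEY_ID = '<api-key-id>';

Future<void> main() async {
  // The vision service runs on your machine, so connect to the machine first
  final machine = await RobotClient.atAddress(
    MACHINE_ADDRESS,
    RobotClientOptions.withApiKey(apiKey: API_KEY, apiKeyId: API_KEY_ID),
  );
  final viamClient = await ViamClient.withApiKey(
    apiKeyId: API_KEY_ID,
    apiKey: API_KEY,
  );

  final myDetector = VisionClient.fromRobot(machine, "<detector_name>");
  final dataClient = viamClient.dataClient;

  // Capture an image from the camera and run the detector on it
  final result = await myDetector.captureAllFromCamera(
    "<camera_name>",
    returnImage: true,
    returnDetections: true,
  );

  // Process each detection and add bounding boxes
  for (final detection in result.detections) {
    final bboxId = await dataClient.addBoundingBoxToImageById(
      binaryId: "<YOUR-BINARY-DATA-ID>",
      label: detection.className,
      xMinNormalized: detection.xMinNormalized,
      yMinNormalized: detection.yMinNormalized,
      xMaxNormalized: detection.xMaxNormalized,
      yMaxNormalized: detection.yMaxNormalized,
    );
    print('Added bounding box ID: $bboxId for detection: ${detection.className}');
  }

  await machine.close();
}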
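The bounding box examples above use normalized coordinates, where 0 and 1 are the image edges. If a detector only gives you pixel coordinates, you can convert them by dividing x values by the image width and y values by the image height. The sketch below does this. `normalize_box` is a hypothetical helper. Clamping to [0, 1] is an assumption meant to handle boxes that extend slightly past the image edge.

```python
from typing import Tuple


def normalize_box(
    x_min: float, y_min: float, x_max: float, y_max: float, width: int, height: int
) -> Tuple[float, float, float, float]:
    """Convert a pixel bounding box to normalized (0 to 1) coordinates, clamped to the image."""
    if width <= 0 or height <= 0:
        raise ValueError("image width and height must be positive")

    def clamp(v: float) -> float:
        return min(max(v, 0.0), 1.0)

    return (
        clamp(x_min / width),
        clamp(y_min / height),
        clamp(x_max / width),
        clamp(y_max / height),
    )
```

For example, a 640×480 image with a box from (64, 48) to (320, 240) gives `(0.1, 0.1, 0.5, 0.5)`.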

Once you’ve annotated your dataset, you can train an ML model to make inferences.

The following examples, in Python and Go, capture an image, use an ML model to generate annotations, and then add the image to a dataset. You can use this logic to expand and improve your datasets continuously over time. Check the annotation accuracy on the DATA page, then retrain your ML model on the improved dataset.

import asyncio
from datetime import datetime

from viam.app.viam_client import ViamClient
from viam.components.camera import Camera
from viam.media.video import ViamImage
from viam.robot.client import RobotClient
from viam.rpc.dial import Credentials, DialOptions
from viam.services.vision import VisionClient

# Configuration constants – replace with your actual values
API_KEY = ""  # API key, find or create in your organization settings
API_KEY_ID = ""  # API key ID, find or create in your organization settings
DATASET_ID = ""  # the ID of the dataset you want to add the image to
MACHINE_ADDRESS = ""  # the address of the machine you want to capture images from
CLASSIFIER_NAME = ""  # the name of the classifier you want to use
CAMERA_NAME = ""  # the name of the camera you want to capture images from
PART_ID = ""  # the part ID of the binary data you want to add to the dataset


async def connect() -> ViamClient:
    """Establish a connection to the Viam client using API credentials."""
    dial_options = DialOptions(
        credentials=Credentials(
            type="api-key",
            payload=API_KEY,
        ),
        auth_entity=API_KEY_ID,
    )
    return await ViamClient.create_from_dial_options(dial_options)


async def connect_machine() -> RobotClient:
    """Establish a connection to the machine using its address."""
    machine_opts = RobotClient.Options.with_api_key(
        api_key=API_KEY,
        api_key_id=API_KEY_ID,
    )
    return await RobotClient.at_address(MACHINE_ADDRESS, machine_opts)


async def main() -> int:
    viam_client = await connect()
    data_client = viam_client.data_client
    machine = await connect_machine()

    try:
        camera = Camera.from_robot(machine, CAMERA_NAME)
        classifier = VisionClient.from_robot(machine, CLASSIFIER_NAME)

        # Capture image
        image_frame = await camera.get_image()

        # Upload data
        file_id = await data_client.binary_data_capture_upload(
            part_id=PART_ID,
            component_type="camera",
            component_name=CAMERA_NAME,
            method_name="GetImage",
            data_request_times=[datetime.utcnow(), datetime.utcnow()],
            file_extension=".jpg",
            binary_data=image_frame.data,
        )
        print(f"Uploaded image: {file_id}")

        # Annotate image with an initial tag
        await data_client.add_tags_to_binary_data_by_ids(
            tags=["test"],
            binary_ids=[file_id],
        )

        # Get image from data in Viam
        data = await data_client.binary_data_by_ids([file_id])
        binary_data = data[0]

        # Convert binary data to ViamImage (the MIME type travels with the image)
        mime_type = binary_data.metadata.capture_metadata.mime_type
        image = ViamImage(binary_data.binary, mime_type)

        # Get the top two classifications for the image
        tags = await classifier.get_classifications(image=image, count=2)
        if not tags:
            print("No tags found")
            return 1

        for tag in tags:
            await data_client.add_tags_to_binary_data_by_ids(
                tags=[tag.class_name],
                binary_ids=[file_id],
            )
            print(f"Added tag to image: {tag.class_name}")

        print("Adding image to dataset...")
        await data_client.add_binary_data_to_dataset_by_ids(
            binary_ids=[file_id],
            dataset_id=DATASET_ID,
        )
        print(f"Added image to dataset: {file_id}")
        return 0
    finally:
        # Close both connections, including on the early return above
        viam_client.close()
        await machine.close()


if __name__ == "__main__":
    asyncio.run(main())

package main

import (
	"bytes"
	"context"
	"fmt"
	"image/jpeg"
	"time"

	"go.viam.com/rdk/app"
	"go.viam.com/rdk/components/camera"
	"go.viam.com/rdk/logging"
	"go.viam.com/rdk/robot/client"
	"go.viam.com/rdk/services/vision"
	"go.viam.com/rdk/utils"
	"go.viam.com/utils/rpc"
)

func main() {
	apiKey := ""
	apiKeyID := ""
	datasetID := ""
	machineAddress := ""
	classifierName := ""
	cameraName := ""
	partID := ""

	logger := logging.NewDebugLogger("client")
	ctx := context.Background()

	// Check each connection's error immediately, before err is reused
	viamClient, err := app.CreateViamClientWithAPIKey(
		ctx, app.Options{}, apiKey, apiKeyID, logger)
	if err != nil {
		logger.Fatal(err)
	}
	defer viamClient.Close()

	machine, err := client.New(
		ctx,
		machineAddress,
		logger,
		client.WithDialOptions(rpc.WithEntityCredentials(
			apiKeyID,
			rpc.Credentials{
				Type:    rpc.CredentialsTypeAPIKey,
				Payload: apiKey,
			})),
	)
	if err != nil {
		logger.Fatal(err)
	}
	defer machine.Close(ctx)

	// Capture image from camera
	cam, err := camera.FromRobot(machine, cameraName)
	if err != nil {
		logger.Fatal(err)
	}
	image, _, err := cam.Image(ctx, utils.MimeTypeJPEG, nil)
	if err != nil {
		logger.Fatal(err)
	}

	// Upload image to Viam
	dataClient := viamClient.DataClient()
	binaryDataID, err := dataClient.BinaryDataCaptureUpload(
		ctx,
		image,
		partID,
		"camera",
		cameraName,
		"GetImage",
		".jpg",
		&app.BinaryDataCaptureUploadOptions{
			DataRequestTimes: &[2]time.Time{time.Now(), time.Now()},
		},
	)
	if err != nil {
		logger.Fatal(err)
	}
	fmt.Printf("Uploaded image: %s\n", binaryDataID)

	// Convert the captured JPEG bytes to image.Image
	img, err := jpeg.Decode(bytes.NewReader(image))
	if err != nil {
		logger.Fatal(err)
	}

	// Get the top two classifications for the image
	classifier, err := vision.FromRobot(machine, classifierName)
	if err != nil {
		logger.Fatal(err)
	}
	classifications, err := classifier.Classifications(ctx, img, 2, nil)
	if err != nil {
		logger.Fatal(err)
	}
	if len(classifications) == 0 {
		logger.Fatal("no classifications found")
	}

	for _, classification := range classifications {
		err := dataClient.AddTagsToBinaryDataByIDs(ctx, []string{classification.Label()}, []string{binaryDataID})
		if err != nil {
			logger.Fatal(err)
		}
		fmt.Printf("Added tag to image: %s\n", classification.Label())
	}

	// Add image to dataset
	err = dataClient.AddBinaryDataToDatasetByIDs(ctx, []string{binaryDataID}, datasetID)
	if err != nil {
		logger.Fatal(err)
	}
	fmt.Printf("Added image to dataset: %s\n", binaryDataID)
}
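To run this logic continuously, you might wrap the annotate-and-add steps in a function that a capture loop calls for each new image. The sketch below assumes that an image with no model output should stay out of the dataset rather than stop the program, which is unlike the examples above. `annotate_and_add` and `RecordingDataClient` are hypothetical. The fake client uses the Python method names from this page and records each call, so you can check the control flow without a Viam connection. With a real connection, pass `viam_client.data_client` instead.

```python
import asyncio
from typing import Any, List, Tuple


class RecordingDataClient:
    """Hypothetical in-memory stand-in that records data client calls."""

    def __init__(self) -> None:
        self.calls: List[Tuple[str, tuple]] = []

    async def add_tags_to_binary_data_by_ids(self, tags: List[str], binary_ids: List[str]) -> None:
        self.calls.append(("add_tags", (tuple(tags), tuple(binary_ids))))

    async def add_binary_data_to_dataset_by_ids(self, binary_ids: List[str], dataset_id: str) -> None:
        self.calls.append(("add_to_dataset", (tuple(binary_ids), dataset_id)))


async def annotate_and_add(data_client: Any, binary_id: str, tags: List[str], dataset_id: str) -> bool:
    """Tag one uploaded image, then add it to a dataset; skip images with no tags."""
    if not tags:
        return False  # leave unannotated images out of the training dataset
    await data_client.add_tags_to_binary_data_by_ids(tags=tags, binary_ids=[binary_id])
    await data_client.add_binary_data_to_dataset_by_ids(binary_ids=[binary_id], dataset_id=dataset_id)
    return True
```

Tagging before adding to the dataset means that any image in the dataset already carries annotations, even if the program stops between steps.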
